Neural Networks

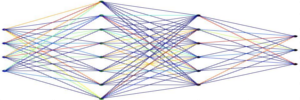

Neural networks can be used to solve a variety of problems that are difficult to solve by other means. The approach uses supervised learning. The basic idea is to gather a set of inputs and a corresponding set of target outputs; the network then learns a mapping that bridges the two. Such networks are chiefly used to solve non-linear problems.

Neural networks are loosely based on a biological metaphor of nerve cells. The network accepts inputs and applies weights to them to calculate a series of intermediate values stored in what are termed nodes or neurons. To extend the biological metaphor, each neuron sums its weighted inputs and passes that sum through an activation function, which determines whether, and how strongly, the neuron fires or activates. Because activation functions such as the sigmoid are non-linear, the network as a whole can produce non-linear results.
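A single neuron can be sketched in a few lines of Python. This is a minimal illustration, assuming a logistic (sigmoid) activation and a bias term; the names `sigmoid` and `neuron_output` are hypothetical and not part of this implementation's API.

```python
import math

def sigmoid(x):
    """Logistic activation: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus a bias, passed through an activation function."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)

# The activation makes the neuron non-linear: doubling the input does not double the output.
print(neuron_output([1.0], [1.0], 0.0))  # sigmoid(1)
print(neuron_output([2.0], [1.0], 0.0))  # sigmoid(2), not 2 * sigmoid(1)
```

Passing `activation=lambda s: s` turns the same neuron into a purely linear unit, which is one way to see that the non-linearity comes entirely from the activation function.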

By comparing the outputs to the target values, the network computes an error, and from it an error gradient. Using a technique called backpropagation, the error is propagated backward from the output layer, and each weight is adjusted in proportion to the slope of the error with respect to that weight. By repeatedly cycling through the inputs and targets and adjusting the weights each time, the network's weights are gradually shifted so that its outputs more closely match the desired target values.
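The training cycle described above can be sketched in plain Python for a tiny network with one hidden layer learning XOR. This is an illustrative sketch under stated assumptions, not this implementation's code: it assumes sigmoid units, a squared-error loss, online (per-sample) weight updates, and a hypothetical `train_xor` function.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_xor(hidden=4, epochs=5000, lr=0.5, seed=1):
    """Train a 2-hidden-1 network on XOR with backpropagation (hypothetical helper)."""
    rng = random.Random(seed)
    data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
    # w_ih[j] = [weight from x0, weight from x1, bias] for hidden node j
    w_ih = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(hidden)]
    # w_ho[j] = weight from hidden node j to the output; the last entry is the output bias
    w_ho = [rng.uniform(-1, 1) for _ in range(hidden + 1)]

    def forward(x):
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w_ih]
        o = sigmoid(sum(w_ho[j] * h[j] for j in range(hidden)) + w_ho[-1])
        return h, o

    def mse():
        return sum((t - forward(x)[1]) ** 2 for x, t in data) / len(data)

    initial = mse()
    for _ in range(epochs):
        for x, t in data:
            h, o = forward(x)
            # Output delta: slope of E = 0.5 * (t - o)^2 with respect to the output's net input
            delta_o = (o - t) * o * (1 - o)
            # Hidden deltas: the output delta propagated back through the (not yet updated) weights
            delta_h = [delta_o * w_ho[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            # Gradient-descent step: each weight moves against its own error slope
            for j in range(hidden):
                w_ho[j] -= lr * delta_o * h[j]
            w_ho[-1] -= lr * delta_o
            for j in range(hidden):
                w_ih[j][0] -= lr * delta_h[j] * x[0]
                w_ih[j][1] -= lr * delta_h[j] * x[1]
                w_ih[j][2] -= lr * delta_h[j]

    def predict(x):
        return forward(x)[1]

    return initial, mse(), predict

initial, final, predict = train_xor()
print(f"mean squared error: {initial:.4f} -> {final:.4f}")
```

Note that the hidden deltas are computed before the output weights are changed, so every gradient in one step is taken with respect to the same set of weights.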

Implementation

This implementation supports various kinds of network structures and neuron types:

Network structures:

- Multiple hidden layers

- Sparse networks

- Skip layer connections

- Recurrent networks
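One way to picture how a single representation can cover these structures is an explicit list of connections evaluated node by node. The sketch below is a hypothetical illustration, not this implementation's data model: the node names, `CONNECTIONS`, `forward`, and `run_sequence` are all assumptions. Leaving pairs out of the list makes the network sparse, a direct input-to-output entry is a skip-layer connection, and a context node that holds a hidden node's previous value provides recurrence.

```python
import math

# Node names in evaluation order: inputs and the context node first, then hidden, then output.
ORDER = ["x1", "x2", "c1", "h1", "h2", "y"]

# (source, target, weight) triples. Only listed pairs are connected, so the net is sparse
# (x2 never feeds h1), and x1 -> y skips the hidden layer entirely.
CONNECTIONS = [
    ("x1", "h1", 0.5),
    ("x2", "h2", -0.3),
    ("c1", "h2", 0.8),   # recurrent path: c1 holds h1's value from the previous step
    ("h1", "y", 1.0),
    ("h2", "y", 1.0),
    ("x1", "y", 0.25),   # skip-layer connection
]

ACTIVATIONS = {"h1": math.tanh, "h2": math.tanh, "y": lambda s: s}  # linear output node

def forward(inputs, context):
    """Evaluate every node once, in ORDER, using only the listed connections."""
    values = {**inputs, **context}
    for node in ORDER:
        if node in values:  # inputs and context values are supplied, not computed
            continue
        total = sum(w * values[src] for src, tgt, w in CONNECTIONS if tgt == node)
        values[node] = ACTIVATIONS[node](total)
    return values

def run_sequence(sequence):
    """Feed (x1, x2) pairs one step at a time, copying h1 into c1 between steps."""
    context = {"c1": 0.0}
    outputs = []
    for x1, x2 in sequence:
        values = forward({"x1": x1, "x2": x2}, context)
        outputs.append(values["y"])
        context = {"c1": values["h1"]}
    return outputs

print(run_sequence([(1.0, 0.0), (1.0, 0.0)]))  # same input, different outputs: the net has memory
```

The context node follows the context-unit idea used in Elman-style recurrent networks; adding more hidden layers only means adding more node names and connections.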

Neuron types:

- Sigmoidal, tanh, and linear activation functions

- Activation functions can be set by node or layer

- Easily subclassed custom activation functions
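A common way to support these options is a small activation base class with one subclass per function, plus a per-node attribute that a layer can set in bulk. The following is a hypothetical sketch in that spirit; the class names `ActivationFunction`, `Sigmoid`, `Tanh`, `Linear`, `Softplus`, `Node`, and `Layer` are assumptions, not this implementation's API, and `Softplus` stands in for a user-defined custom function.

```python
import math

def _stable_sigmoid(x):
    """Logistic function written to avoid overflow in math.exp for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

class ActivationFunction:
    """Base class: subclasses override activate() and derivative()."""
    def activate(self, x):
        raise NotImplementedError
    def derivative(self, x):
        """Slope of activate() at the weighted input x (used by backpropagation)."""
        raise NotImplementedError

class Sigmoid(ActivationFunction):
    def activate(self, x):
        return _stable_sigmoid(x)
    def derivative(self, x):
        s = _stable_sigmoid(x)
        return s * (1.0 - s)

class Tanh(ActivationFunction):
    def activate(self, x):
        return math.tanh(x)
    def derivative(self, x):
        return 1.0 - math.tanh(x) ** 2

class Linear(ActivationFunction):
    def activate(self, x):
        return x
    def derivative(self, x):
        return 1.0

class Softplus(ActivationFunction):
    """Example custom activation, log(1 + e^x), added purely by subclassing."""
    def activate(self, x):
        return max(x, 0.0) + math.log1p(math.exp(-abs(x)))  # numerically stable form
    def derivative(self, x):
        return _stable_sigmoid(x)  # the derivative of softplus is the sigmoid

class Node:
    def __init__(self, activation=None):
        self.activation = activation or Sigmoid()

class Layer:
    def __init__(self, size, activation=None):
        self.nodes = [Node(activation) for _ in range(size)]
    def set_activation(self, activation):
        """Layer-level setting: apply one activation function to every node."""
        for node in self.nodes:
            node.activation = activation

layer = Layer(3, Tanh())              # set by layer
layer.nodes[1].activation = Softplus()  # override a single node
```

Because each activation carries its own derivative, a training routine can call `node.activation.derivative(...)` without knowing which function a node uses.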

Processing:

- Input patterns can be processed in a random order in each epoch
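Randomizing the presentation order usually amounts to shuffling the sample indices at the start of every epoch, so each sample is still seen exactly once per epoch. A minimal sketch, assuming hypothetical `epoch_orders` and `train` helpers and a caller-supplied `update(inputs, target)` callback that performs one weight adjustment:

```python
import random

def epoch_orders(n_samples, epochs, randomize=True, seed=None):
    """Yield the order in which sample indices are presented in each epoch."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    for _ in range(epochs):
        order = indices[:]      # fresh copy so every epoch covers the full set
        if randomize:
            rng.shuffle(order)  # new random permutation for this epoch
        yield order

def train(samples, epochs, update, randomize=True, seed=None):
    """Call update(inputs, target) once per sample per epoch, in the chosen order."""
    for order in epoch_orders(len(samples), epochs, randomize, seed):
        for i in order:
            inputs, target = samples[i]
            update(inputs, target)
```

Passing a fixed `seed` makes a randomized run reproducible, and `randomize=False` falls back to presenting the samples in their stored order.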